WILL DUPLICATE CONTENT PENALISE ME IN GOOGLE?

HOW MUCH COPIED CONTENT CAN I USE? CAN I COPY CONTENT?

As far as duplication is concerned, the simple answer is none. You should certainly make sure that all your URLs and page titles, and most of your title tags and descriptions, are bespoke.

And if you're not sure, you can use a page title and description checker, which lets you check lots of URLs within a site in one go. But what about the actual on-page text content, or body content as we like to call it? I get a few calls from people who think they can just copy their website and put it under a different domain name or URL, with NO substantial changes. NOTE: this DOES NOT WORK! It will usually penalise one or other of the websites if you are trying to rank them in a search engine.

The Google search engine is a product that relies on its quality, which is defined by the search results it returns, yet Google is extremely vague on this topic. If you want to read what Google has to say on this subject, see its guidance on duplicate content. In short, it says no: when Google refers to duplicate content, it means large blocks of copied or very similar text and content, either within one website or across two or more websites. It also goes on to say that duplicate content is not grounds for action, and I quote (so sorry for the following duplication!): "unless it appears that the intent of the duplicate content is to be deceptive and manipulate search engine results. If your site suffers from duplicate content issues, and you don't follow the advice listed above, we do a good job of choosing a version of the content to show in our search results." (Google Webmaster)

So what it does not say is whether it will penalise the whole site to some degree, or just that page and any subsequent duplicated pages. Now, just about every page within a website will have a certain level of duplication. Take this page, for example: the content to the far right is duplicated on most pages, the footer is duplicated across the whole site, and so are certain parts of the header! Likewise, much of the HTML code and the CSS file are duplicated. So what is included then: just the on-page text? Well, we are never going to know, as this is top secret.

But I hope to clarify some good and bad practices. So how much duplication is good (or OK), and can we measure it accurately using a tool like the similar page checker at http://www.webconfs.com/similar-page-checker.php? Well, I have just checked several pages on another website which I know for a fact are very similar, and this tool returned 89% duplication, which I thought was high. However, both pages rank No. 1 and No. 2 for their main keywords! So large amounts of duplicate content across websites can penalise us and affect our rankings, but we can get away with quite a bit. Beware, though: Google may change the parameters on this ranking factor in future updates. So if you have a sudden fall in rankings and you know you have large amounts of duplication, this is a potential cause. And we all know that Google loves fresh content!
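To get a feel for what a figure like "89% duplication" means, here is a minimal Python sketch of a page-similarity score. It is an assumption, not the method the webconfs tool uses (that is not published here): it compares the word sequences of two blocks of body text with the standard library's `difflib.SequenceMatcher` and reports a 0-100 percentage. The names `visible_words` and `similarity_percent` are hypothetical, and the inputs are assumed to be plain text with the HTML already stripped.

```python
import re
from difflib import SequenceMatcher


def visible_words(text: str) -> list[str]:
    """Lower-case the text and split it into words, ignoring punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def similarity_percent(page_a: str, page_b: str) -> float:
    """Return a rough 0-100 similarity score for two blocks of body text.

    Assumption: similarity is measured as difflib's matching ratio over the
    two word sequences; real duplicate checkers may work differently.
    """
    words_a = visible_words(page_a)
    words_b = visible_words(page_b)
    if not words_a and not words_b:
        return 100.0  # two empty pages are trivially identical
    ratio = SequenceMatcher(None, words_a, words_b, autojunk=False).ratio()
    return round(ratio * 100, 1)


print(similarity_percent(
    "We offer SEO consultancy in London for small businesses.",
    "We offer SEO consultancy in Kent for small businesses.",
))  # prints 88.9
```

Swapping a single word in a nine-word sentence already scores about 89%, which shows how quickly templated, near-identical pages reach high percentages.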

WHAT ABOUT DUPLICATE CONTENT WITHIN MY WEBSITE?

So what about duplication across a number of pages within one single website?

Well, I have just shown that you can get away with 89% duplication, but this is because both pages have unique titles and descriptions, and all the <h1>, <h2> and <h3> headings are different. All the image alt tags and title tags are different, and so are the contact forms. Also, although I am not sure how important this is, all the inbound and outbound links are different.

Now, I have looked at another site, from a company I have been doing work for, and found 89% duplication across a number of its pages too. But only two of these pages seem to be indexed by Google, and they do not rank very highly. This is where I have a few issues: the site is developed in WordPress, and the pages being returned are basically extensions of the one page, over 36 pages of extensions, as they have that much content! What makes things worse is that the website then uses a filter for specific topics, which does not seem to get indexed.

However, there are many more issues with the site, from duplicated titles and descriptions to the way the pages are set up to return the same body of text. That text is substantial, so in my mind it would count as bulk copied or duplicated content. But these pages also return 89%, so does that mean it is OK?

UPDATE: It would seem that this is a common issue with a few WordPress themes and plugins, whereby the site returns searches, news, products, tags, categories and comment items under several different URLs, either through specific filters, through assigning certain categories, or through the way it archives items. Here is an example where the same page appears under several different URL strings:

- http://www.joebloggs.com/page-x/
- http://www.joebloggs.com/product-category/page-x/
- http://www.joebloggs.com/article-category/page-x/

Please check back in 7-10 days, when I hope to be able to shed more light on the subject, with corrections to some of the things that may be masking the issue further.
MORE TO COME...
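The WordPress problem described in the update, where one page is reachable under several category or archive paths, can be spotted from a plain list of URLs. This is a rough Python sketch under one stated assumption: that the duplicated URLs share the same final path segment (the slug), as in the joebloggs.com example. `group_by_slug` is a hypothetical helper, and collecting the URL list (for example from a crawl or sitemap) is left out.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_slug(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs by their last path segment and keep only repeated slugs.

    Assumption: duplicated WordPress URLs share the same final slug,
    e.g. /page-x/ and /product-category/page-x/.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        slug = segments[-1] if segments else "/"  # "/" stands for the home page
        groups[slug].append(url)
    # A slug reachable under more than one URL is a duplicate-content candidate.
    return {slug: found for slug, found in groups.items() if len(found) > 1}


crawled = [
    "http://www.joebloggs.com/page-x/",
    "http://www.joebloggs.com/product-category/page-x/",
    "http://www.joebloggs.com/article-category/page-x/",
    "http://www.joebloggs.com/contact/",
]
for slug, found in group_by_slug(crawled).items():
    print(slug, "->", found)
```

Shared slugs are only candidates: pagination segments such as /page/2/ will also collide, so each group still needs checking by hand before adding canonical tags or redirects.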

As an SEO consultant in London, I carry out consultancy work for individuals, sole traders, small companies and large businesses, providing specialist SEO advice on several different levels in and around London. We help start-ups, new businesses and established companies.

So what can an SEO Consultant do for you or your company?

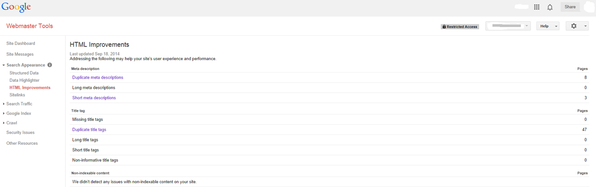

HOW GOOGLE WEBMASTER TELLS US ABOUT DUPLICATION

Google Webmaster Tools (now Google Search Console) does provide a tool to check some of this duplication. Under Search Appearance, go to HTML Improvements, and you will be shown short, long and duplicate titles and descriptions. There is also a section called Non-indexable content, which I have never seen flag any issues on any website. Is this perhaps the duplicate content flagger? It would be interesting to hear from people who have had this section highlighted, and perhaps why.
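If you don't have access to that report, a similar duplicate title and description check can be sketched with Python's built-in `html.parser`. This assumes you have already downloaded each page's HTML into a dictionary keyed by URL; `HeadParser` and `find_duplicates` are hypothetical names, and the sketch only reads the `<title>` element and the `<meta name="description">` tag.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadParser(HTMLParser):
    """Collect the <title> text and the meta description from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicates(pages: dict[str, str]) -> dict[str, dict[str, list[str]]]:
    """Map each repeated title and description to the URLs that share it."""
    titles = defaultdict(list)
    descriptions = defaultdict(list)
    for url, html in pages.items():
        parser = HeadParser()
        parser.feed(html)
        titles[parser.title.strip()].append(url)
        if parser.description:
            descriptions[parser.description].append(url)
    return {
        "titles": {t: u for t, u in titles.items() if t and len(u) > 1},
        "descriptions": {d: u for d, u in descriptions.items() if len(u) > 1},
    }


pages = {
    "/a/": "<title>SEO London</title><meta name='description' content='SEO help'>",
    "/b/": "<title>SEO London</title><meta name='description' content='Other text'>",
    "/c/": "<title>Contact</title><meta name='description' content='SEO help'>",
}
print(find_duplicates(pages))
```

Here /a/ and /b/ share a title while /a/ and /c/ share a description, so both pairs would be candidates for rewriting.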

HOW TO COMBAT DUPLICATE CONTENT

As a rule, I change and adjust any content that I know is duplicated across a website and across pages. I do this in several ways, not only to help with potential duplication issues but also to make sure the copied content is more relevant to the keywords of the page I have copied it to. This may involve just changing the title and/or alt tag of the image, if one is used! But I will also change some of the actual content to include more of the keywords for that page, which in turn should also help with ranking.

TOP WAYS TO AVOID DUPLICATE CONTENT ON WEBSITES

Avoiding duplicate content on websites is crucial for maintaining search engine optimization (SEO) and providing a good user experience. Here are some strategies to help you avoid duplicate content:

- Create Unique Content: The most effective way to avoid duplicate content is to create original, unique content for each page of your website. This includes product descriptions, blog posts, and other textual content.

- Canonical URLs: Use canonical tags to indicate the preferred version of a page when there are multiple URLs with similar content. This tells search engines which version of the page should be indexed and displayed in search results.

- 301 Redirects: If you have multiple URLs that point to the same content, set up 301 redirects to redirect users and search engines to the preferred URL. This consolidates the authority of inbound links and ensures that only one version of the content is indexed.

- Parameter Handling: If your website uses parameters in URLs (e.g., session IDs or tracking parameters), make sure your website handles them consistently, for example by pointing a canonical tag at the parameter-free version of each page. Google Search Console used to offer a URL Parameters tool for telling Google which parameters to ignore, but that tool was retired in 2022.

- Use “Noindex”: For pages that you don’t want indexed by search engines (such as duplicate or thin content pages), use the robots meta tag with the "noindex" directive. This prevents search engines from including those pages in their index.

- Avoid Scraped Content: Avoid using content scraped from other websites, as this can result in duplicate content issues. Always create original content or properly attribute and use content from other sources.

- Syndicated Content: If you syndicate content from other websites, use canonical tags or noindex directives to avoid duplicate content penalties. Ensure that you have permission to use the content and follow best practices for syndication.

- Consolidate Similar Pages: If you have multiple pages with similar content, consider consolidating them into a single, comprehensive page. This helps to avoid diluting your site’s authority and reduces the risk of duplicate content.

- Check for Scrapers: Regularly monitor your website for instances of scraped content by using tools like Copyscape or Google Alerts. If you find scraped content, take action to have it removed or properly attributed.

- Monitor Your Website: Regularly monitor your website’s performance in search engine results pages (SERPs) and check for duplicate content issues using tools like Google Search Console. Address any issues promptly to maintain your website’s SEO performance.
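For the canonical URL and parameter-handling points above, here is a minimal Python sketch that normalises a URL and builds the matching `<link rel="canonical">` tag. The set of ignored parameters (`IGNORED_PARAMS`) is a hypothetical example; which parameters are safe to drop depends on your own site, and a real site would also need to settle trailing slashes and `www` versus non-`www`, which this sketch leaves alone.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical example: query parameters assumed never to change page content.
IGNORED_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid",
}


def canonical_url(url: str) -> str:
    """Normalise a URL so that variants of the same page compare equal.

    Lower-cases the scheme and host, drops ignored parameters and the
    #fragment, and sorts the remaining parameters. The path is kept as-is.
    """
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(kept),
        "",  # fragments are never sent to the server, so drop them
    ))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element to place in the page <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_link_tag(
    "HTTP://WWW.JoeBloggs.com/page-x/?utm_source=news&colour=red#top"
))
```

Every tracking-tagged variant of a page then points search engines at one preferred URL; the same `canonical_url` function could also be used to build a 301 redirect map.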

CAN I USE CONTENT THAT HAS BEEN PRODUCED BY AI?

Whether you can use content produced by an AI robot is a question I am frequently asked, ranging from "Can I use AI-generated content for my website?" to "Can I use it for my blog or social media pages?" I have done numerous trials and tests using AI-generated content in various forms.

I was also part of a worldwide SEO competition in which one of the competitors openly used AI-generated content to create a website, partly to prove that you could rank highly in the Google search engine using AI-generated content. They did not win, but the website ranked fairly highly overall in the competition. What we don't know is whether that was down to their good (or even bad) SEO techniques.

My rule of thumb when using AI-generated content:

Read through the content and make sure it is written in your voice, using the words and phrases that you would use. Sometimes AI-generated content does read as if a robot has written it. And if you are scared of or sceptical about using AI-generated content, then either don't use it, or make sure you edit it and change at least one word per sentence for good measure.

ARTIFICIAL INTELLIGENCE CONTENT GENERATORS

It seems like a new AI content generator comes out every week, and now there are even image generators. Having not tried them all, nor compared their results, I don't know whether any of them are SEO-friendly. Some certainly produce similar or near-identical results for the same or similar search terms, and therein may be where the problem lies.

Here is a list of a few of the currently most popular AI content generation tools, in no specific order:

- CopyAI, Inc.

- Rytr

- ChatGPT

- Writesonic

- Jasper AI

- Copysmith

- Frase

- Scalenut

- Writer

- Anyword

- Article Forge